DYNAP™-CNN

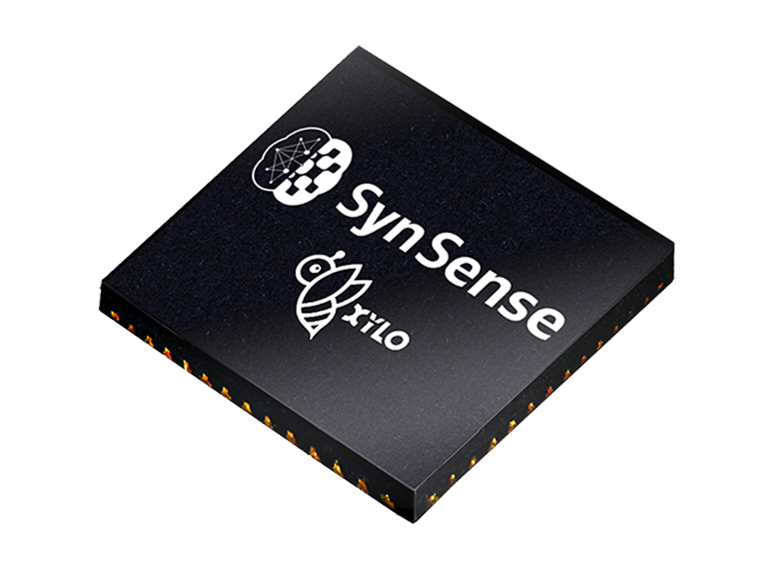

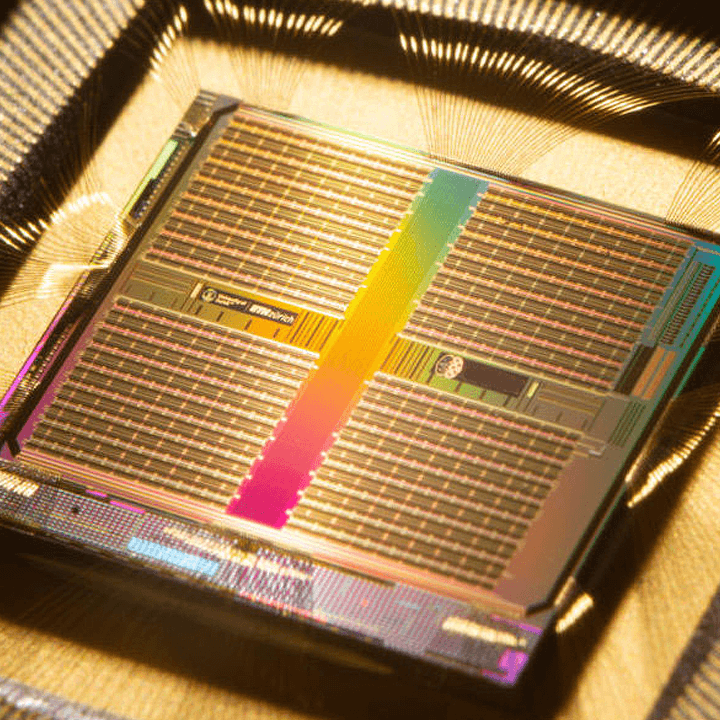

The world’s first fully scalable, event-driven neuromorphic processor, with up to 1M configurable spiking neurons and a direct interface to external dynamic vision sensors (DVS).

DYNAP™-CNN is a fully scalable, event-driven neuromorphic processor built for sensory processing. With up to 1M configurable spiking neurons and a direct interface to external DVS, it is well suited to ultra-low-power, ultra-low-latency event-driven applications.
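To make "event-driven" and "spiking neuron" concrete, here is a minimal sketch of an integrate-and-fire neuron that does work only when an input event arrives. It is a textbook model chosen for illustration, not a description of the DYNAP™-CNN circuit; the class name, the integer threshold and weight, and the timestamps are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class IntegrateAndFireNeuron:
    """Illustrative integrate-and-fire neuron (not the chip's actual neuron model)."""
    threshold: int = 10  # hypothetical firing threshold
    potential: int = 0   # accumulated input, starts at rest

    def receive(self, weight: int) -> bool:
        """Integrate one input event; return True if the neuron emits a spike."""
        self.potential += weight
        if self.potential >= self.threshold:
            self.potential -= self.threshold  # reset by subtracting the threshold
            return True
        return False


def run_event_stream(event_times_us, weight=4):
    """Feed events to one neuron and collect the timestamps of its output spikes.

    The loop body runs once per event, so periods with no input cost no computation.
    """
    neuron = IntegrateAndFireNeuron()
    spike_times = []
    for timestamp_us in event_times_us:
        if neuron.receive(weight):
            spike_times.append(timestamp_us)
    return spike_times


# Two bursts of hypothetical input events separated by a long silent gap.
input_events = [10, 12, 15, 900, 905, 910]
output_spikes = run_event_stream(input_events)
print(output_spikes)
```

The long gap between the two bursts adds no loop iterations, which is the property that lets event-driven hardware stay idle when the scene is static.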

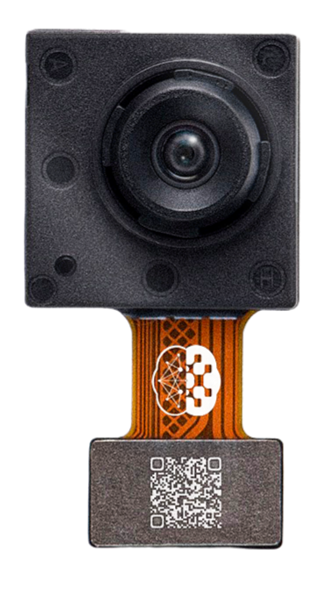

DYNAP™-CNN integrates directly with advanced dynamic vision sensors and accepts their event streams as input, enabling rapid prototyping and straightforward model integration. Its extensive configurability gives you complete control over your models, with support for various types of CNN layers (ReLU, cropping, padding, pooling) and network models (LeNet, ResNet, Inception).

Its scalability also allows deep neural networks to be implemented across multiple interconnected DYNAP™-CNN processors, so network depth is not limited by the capacity of a single device.
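As a rough illustration of spreading a deep network over several interconnected processors, the sketch below splits an ordered list of layers into consecutive groups, one group per device. The function name `partition_layers`, the 20-layer example network, and the per-device capacity of 9 layers are all hypothetical; the actual mapping of layers to hardware may follow different constraints.

```python
def partition_layers(layer_names, layers_per_device):
    """Split an ordered list of layers into consecutive per-device groups."""
    if layers_per_device < 1:
        raise ValueError("layers_per_device must be at least 1")
    return [
        layer_names[start:start + layers_per_device]
        for start in range(0, len(layer_names), layers_per_device)
    ]


# Hypothetical 20-layer network and a hypothetical capacity of 9 layers per device.
network = [f"conv{i}" for i in range(1, 21)]
devices = partition_layers(network, layers_per_device=9)

for index, group in enumerate(devices):
    print(f"device {index}: {group[0]} .. {group[-1]} ({len(group)} layers)")
```

Keeping each group contiguous preserves layer order, so the output of one device can feed the next in a simple chain.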

Applications

- Smart Toy

- Smart Home

- Smart Security

- Autonomous Navigation

- Drones

Scalable

Adaptive to event cameras

Ultra-low latency

End-to-end latency on the order of milliseconds, 10-100x faster

Ultra-low power

100-1000x lower power consumption (~100 mW)

Cost effective

Real-time data processing at 10x lower cost

Always-on

Event-driven computing, with no need for a redundant power management system.

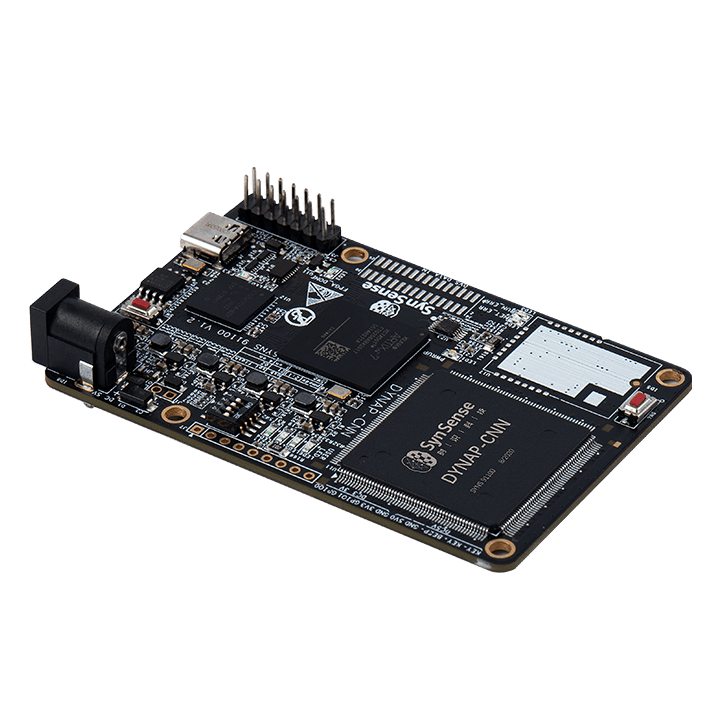

DYNAP™-CNN DEVELOPMENT KIT

The DYNAP™-CNN development kit is powered by SynSense DYNAP™-CNN cores, which bring the flexibility of convolutional vision processing to milliwatt energy budgets. It supports real-time presence detection, real-time gesture recognition, and real-time object classification, all with average energy use in the milliwatt range.

The Dev Kit supports event-based vision applications through direct input or input over USB. Our open-source Python library, SINABS, simplifies the development of convolutional networks with up to nine layers.

Requirements